In this post, we’ll be looking at how to use the Infrahub MCP server. But, before we get there, we’ll go through some background on the Model Context Protocol (MCP) itself, show a simple example to explain how it works, and then connect it back to Infrahub. This will give us the basics before moving on to the Infrahub-specific setup. Here’s what we’ll cover:

- A quick background on what MCP is and why it’s needed

- A simple example of an MCP server to show how it works

- How to connect an MCP server to host applications like Claude Desktop and Cursor

- Setting up and using the Infrahub MCP server

- Example use cases where the Infrahub MCP server can help in real workflows

Disclaimer: OpsMill has partnered with me for this post, and they also support my blog as a sponsor. This post was originally published at https://opsmill.com/blog/getting-started-infrahub-mcp-server/

What is Model Context Protocol (MCP)?

If you're doing anything with AI (and honestly, who isn’t these days), you’ve probably heard of Model Context Protocol, or MCP. Anthropic introduced MCP in November 2024, which means it hasn’t been around for long and is still evolving quickly.

MCP is a communication layer that allows large language models (LLMs) to talk to other external programs. This extends what the model can do and helps it perform tasks it normally wouldn’t be good at on its own. Instead of just generating text, the model can interact with tools, fetch data, run commands, or integrate with existing systems.

The nice part is that MCP standardizes this communication. That means you don’t have to build a custom bridge every time you want your model to work with a different system. It creates a cleaner way to connect AI with the rest of your stack, whether that’s automation, networking, or infrastructure tools.

What’s interesting is that the official definition of MCP actually compares it to a USB-C port. Just as USB-C gives you a single, standardized way to connect your devices to many different peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.

Without a standard, every connection would require a custom adapter or integration. With MCP, the model doesn’t need to know the details of every system it connects to; it just uses the protocol. That consistency is what makes it powerful, especially as more tools and servers start supporting MCP.

MCP - The Missing Piece for LLMs

We all know LLMs are great at processing natural language, but they have no built-in way of connecting to our systems. For example, if I ask an LLM what Infrahub is or how to use it, it will most likely give me a decent explanation. But if I ask it to interact with our own Infrahub instance that’s hosted internally, it has no idea what to do.

Of course, we could write custom code that shows the LLM how to talk to Infrahub or any other tool, and that might work. The problem is that if we later switch to another application or add a new tool, we’d need to build that integration all over again.

This is exactly where MCP helps. With MCP, we can give our LLM access to traditional programs through a standard protocol. Instead of writing custom code each time, the LLM just follows the agreed-upon standard for how it communicates with external tools. This makes the setup reusable, flexible, and much easier to maintain.

MCP components

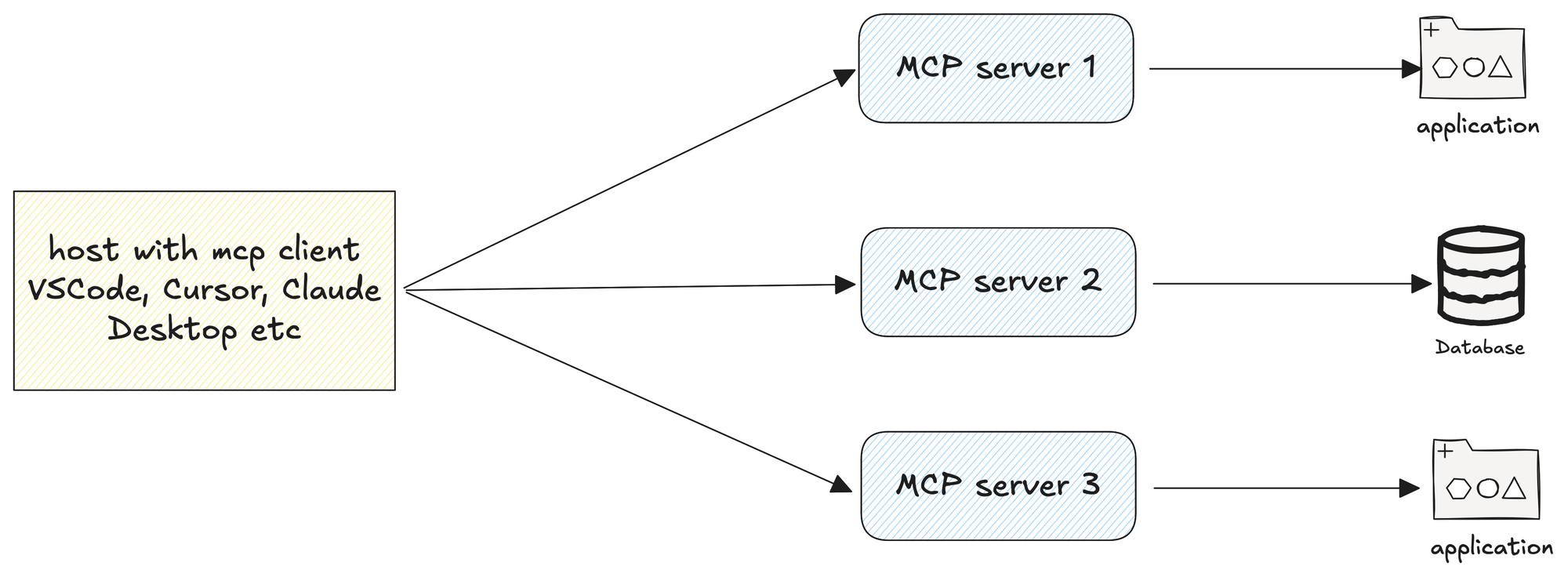

MCP is built around three main components.

- The host application

- MCP Client

- MCP Server

- The host application is usually the interface you interact with, like your AI assistant or chat app (Claude Desktop, VS Code, Cursor, etc.). It’s the place where you type your prompts and get answers. The host manages the conversation and makes sure the model has access to the right context.

- The client sits inside the host application. Its job is to speak the MCP language and handle communication with servers. You can think of it as the adapter that connects the host to external tools.

- The server is the actual tool you want the AI to connect to. For example, it could be a database, a monitoring system, or an automation tool. The server exposes capabilities through MCP, allowing the model to use them.
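To make the three roles concrete, here is a toy, in-process sketch. It is not the real protocol or any real SDK: the class names (ToyServer, ToyClient, ToyHost) and the greet tool are made up for illustration, and everything runs in a single Python process instead of talking over a transport.

```python
from typing import Any, Callable


class ToyServer:
    """Stands in for an MCP server: it owns the tools and runs them."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., Any]] = {}

    def register(self, func: Callable[..., Any]) -> Callable[..., Any]:
        # Store the function under its own name so it can be looked up later.
        self._tools[func.__name__] = func
        return func

    def list_tools(self) -> list[str]:
        return sorted(self._tools)

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        return self._tools[name](**arguments)


class ToyClient:
    """Stands in for the MCP client: the host's adapter to one server."""

    def __init__(self, server: ToyServer) -> None:
        self._server = server

    def discover(self) -> list[str]:
        return self._server.list_tools()

    def invoke(self, name: str, arguments: dict[str, Any]) -> Any:
        return self._server.call_tool(name, arguments)


class ToyHost:
    """Stands in for the host application (the chat app you type into)."""

    def __init__(self, client: ToyClient) -> None:
        self._client = client
        self.available_tools = client.discover()

    def handle_model_request(self, tool: str, arguments: dict[str, Any]) -> Any:
        # The model asks for a tool by name; the host forwards it via the client.
        if tool not in self.available_tools:
            raise ValueError(f"unknown tool: {tool}")
        return self._client.invoke(tool, arguments)


server = ToyServer()


@server.register
def greet(name: str) -> str:
    return f"Hello, {name}!"


host = ToyHost(ToyClient(server))
reply = host.handle_model_request("greet", {"name": "Infrahub"})
print(host.available_tools, reply)
```

The point of the split is that the host only ever talks to the client, so swapping in a different server does not change the host's code.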

A simple MCP server example

To understand how MCP works and how to create an MCP server, let’s look at a simple example. Let’s say you want to add two numbers. Would you trust your LLM to add them if they were financial figures, where the answer has to be accurate every time? LLMs are getting much better at math, but a calculator or a dedicated program is still going to be more reliable right now.

So, how do we tell the LLM to use an external application to add numbers? Without a standard, we would have to write custom integration code for every tool and every host application, which quickly becomes difficult to maintain.

With MCP, we don’t need to do that. The server exposes tools in a standard way, the client handles communication, and the host application calls the right tool when the LLM requests it. This keeps the process consistent and reusable across different applications.

Here’s a minimal server that exposes a simple function which takes two numbers, adds them, and returns the answer. You can save this Python file anywhere on your machine. Just remember the directory, because you’ll need to point to it when configuring the MCP server later.

    # server.py
    from fastmcp import FastMCP

    mcp = FastMCP("Demo")

    @mcp.tool
    def add(a: int, b: int) -> int:
        """Add two numbers"""
        return a + b

The code above shows a minimal MCP server. We start by importing FastMCP, which is a lightweight Python library for building MCP servers. It takes care of all the boilerplate around the protocol, so we only need to focus on exposing the functions we want our LLM to use.

To use the fastmcp library, we first need to install it. The recommended way is with uv, which is a fast Python package installer and virtual environment manager. You can think of it as a modern replacement for pip that’s optimized for speed. Installing FastMCP with uv is as simple as running the uv add command.

    uv add fastmcp

Once installed, we create a server named Demo and define a simple tool called add that takes two integers and returns their sum. The @mcp.tool decorator registers this function as an MCP tool, which means the LLM can call it through the protocol.

We also include type hints, which make it clear that the function takes two integers and returns an integer. This way, the LLM knows exactly what to pass in and what to expect back. The short docstring matters too. It isn’t strictly required, but it becomes the tool’s description and tells the model in plain language what the tool does, so it can choose the right tool when needed.
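To see why type hints and a docstring are enough, here is a rough sketch of how a library could turn a plain function into a tool description. This is not FastMCP’s actual implementation: describe_tool and the JSON_TYPES mapping are made up for this example and only handle a few basic types. The name, description, and inputSchema fields follow the shape MCP uses when a server lists its tools.

```python
import inspect
from typing import Any, Callable, get_type_hints

# Simplified, hypothetical mapping from Python types to JSON Schema type names.
JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def describe_tool(func: Callable[..., Any]) -> dict[str, Any]:
    """Build a tool description from a function's signature and docstring."""
    hints = get_type_hints(func)
    params = inspect.signature(func).parameters
    # One schema property per parameter; unknown or missing hints fall back to "string".
    properties = {
        name: {"type": JSON_TYPES.get(hints.get(name), "string")}
        for name in params
    }
    # Parameters without a default value are treated as required.
    required = [
        name
        for name, param in params.items()
        if param.default is inspect.Parameter.empty
    ]
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def add(a: int, b: int) -> int:
    """Add two numbers"""
    return a + b


tool = describe_tool(add)
print(tool)
```

If you drop the docstring, the description comes back empty, which gives the model much less to go on when it has to pick between several tools.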

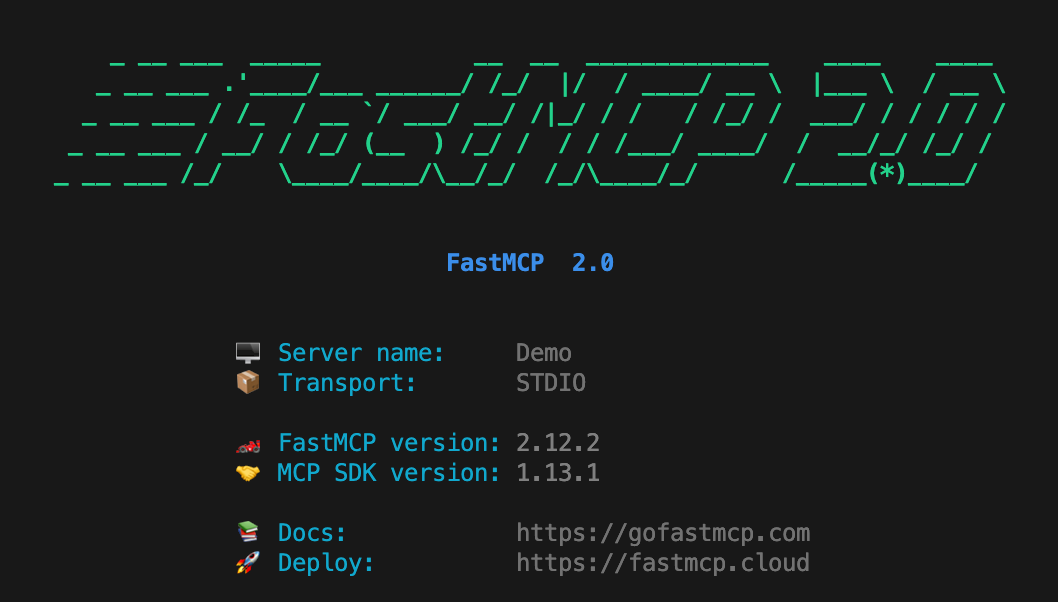

Once everything is in place, you can test the MCP server by running:

    uv run fastmcp run server.py

When the server starts, you’ll notice in the output that the transport is set to STDIO. This means the server communicates over standard input and output streams. STDIO is the simplest transport option and is often used for local development and testing because it doesn’t require setting up networking.
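If you’re curious what actually travels over those streams, MCP messages are JSON-RPC 2.0, and the STDIO transport sends one JSON message per line. Here is a small sketch of that framing. The send and receive helpers are made up for illustration, and an in-memory StringIO stands in for the real stdin pipe.

```python
import io
import json
from typing import Any, TextIO


def send(stream: TextIO, message: dict[str, Any]) -> None:
    # json.dumps escapes newlines inside strings, so one message is always one line.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def receive(stream: TextIO) -> dict[str, Any]:
    line = stream.readline()
    if not line:
        raise EOFError("peer closed the stream")
    return json.loads(line)


# StringIO stands in for the server's stdin pipe in this sketch.
pipe = io.StringIO()
send(
    pipe,
    {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
    },
)

# Rewind and read the message back, as the server side would.
pipe.seek(0)
request = receive(pipe)
print(request["method"], request["params"]["arguments"])
```

This is also why an MCP server using STDIO should not print anything else to standard output: stray text would be read as a broken message.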

MCP also supports Streamable HTTP transport, where the server operates as an independent process that can handle multiple client connections.

Using the MCP server in Claude Desktop

Now that we have a simple MCP server, the next step is to tell the AI application, or the host, how to use it. You don’t need to keep the server running yourself, because the host starts it whenever it’s needed.

Several applications support MCP servers, but in this post, we’ll focus on Claude Desktop and Cursor. Make sure you have one of these installed so you can follow along. You don’t need a paid plan to use them, but you may need to create a free account before getting started.

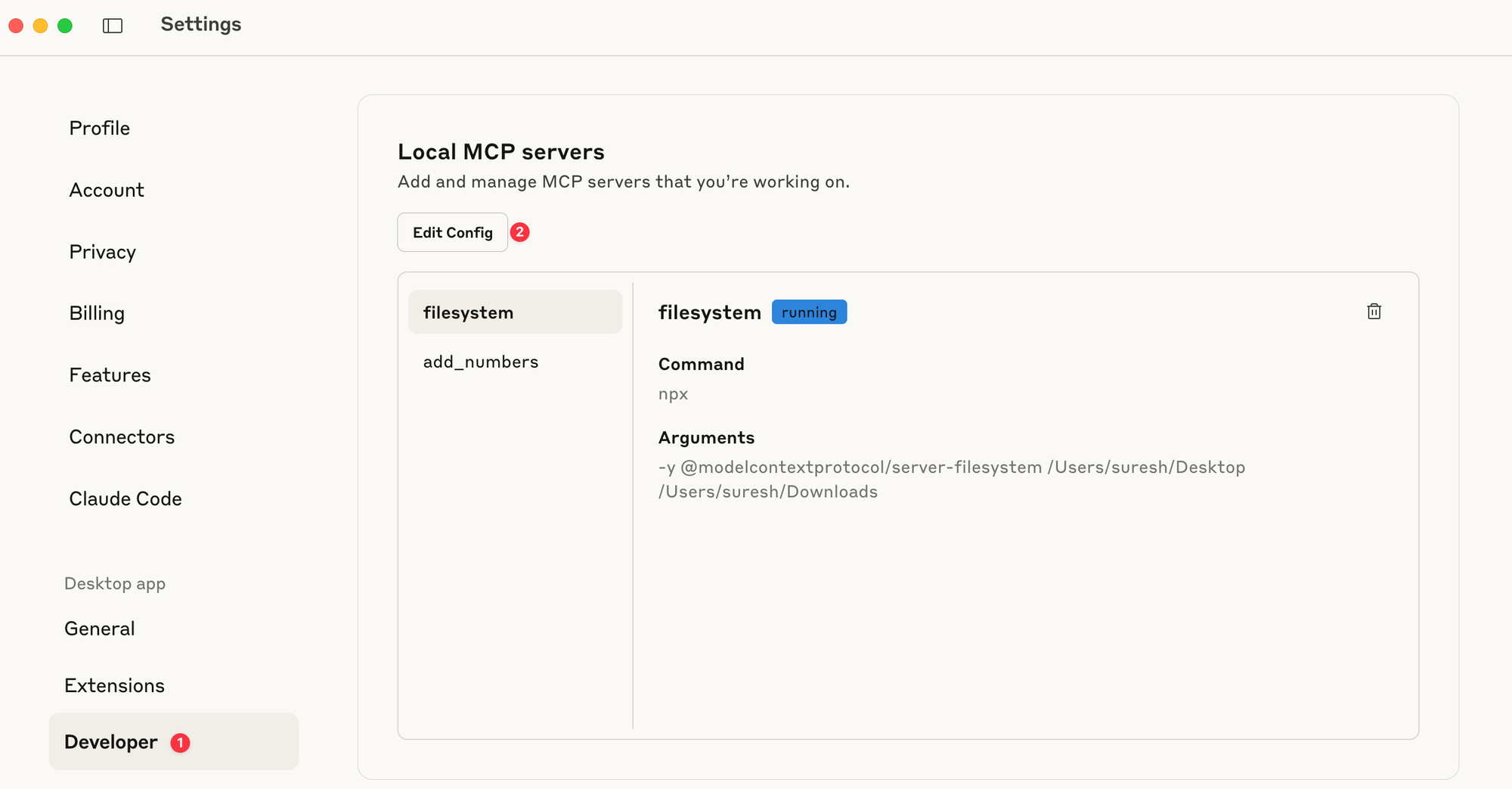

In Claude Desktop, you can add your MCP server by opening Settings, going to the Developer section, and editing the MCP server configuration. This brings up a JSON file where all the local MCP servers are listed. You may already see a filesystem MCP server there since Claude often adds it automatically.

To register your own server, you add a new entry in this JSON file. Each entry tells Claude Desktop how to start the server. The command field defines which program to run, and the args field lists the arguments passed to that program.

For example, in the add_numbers entry, the command calls uv directly, points it to the project directory, and then runs the MCP server with fastmcp run server.py. This tells Claude Desktop exactly how to start our Demo server whenever it needs to use it.

    {
      "mcpServers": {
        "filesystem": {
          "command": "npx",
          "args": [
            "-y",
            "@modelcontextprotocol/server-filesystem",
            "/Users/suresh/Desktop",
            "/Users/suresh/Downloads"
          ]
        },
        "add_numbers": {
          "command": "/Users/suresh/.local/bin/uv",
          "args": [
            "--directory",
            "/Users/suresh/Documents/code/simple_mcp",
            "run",
            "fastmcp",
            "run",
            "server.py"
          ]
        }
      }
    }

What happens behind the scenes is that whenever Claude Desktop needs your server, it launches the subprocess using the command you provided.

Once running, Claude connects to it over the specified transport (STDIO in our case) and automatically discovers the tools (just one in our case, which is the function add) it provides. This way, the LLM sees the available tools and can call them when needed.
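To picture how a config entry becomes a running process, here is a rough sketch of what a host could do with it. This is not Claude Desktop’s actual code. The build_launch helper is hypothetical, and it only builds the command and environment without starting anything.

```python
import json
import os

CONFIG_TEXT = """
{
  "mcpServers": {
    "add_numbers": {
      "command": "/Users/suresh/.local/bin/uv",
      "args": ["--directory", "/Users/suresh/Documents/code/simple_mcp",
               "run", "fastmcp", "run", "server.py"]
    }
  }
}
"""


def build_launch(
    entry: dict, base_env: dict[str, str]
) -> tuple[list[str], dict[str, str]]:
    """Turn one mcpServers entry into an argv list and an environment."""
    # The command comes first, followed by its arguments in order.
    argv = [entry["command"], *entry.get("args", [])]
    # Values from the entry's optional "env" block override the inherited ones.
    env = {**base_env, **entry.get("env", {})}
    return argv, env


config = json.loads(CONFIG_TEXT)
argv, env = build_launch(config["mcpServers"]["add_numbers"], dict(os.environ))
print(" ".join(argv))
# A host could now pass argv and env to subprocess.Popen with stdin/stdout pipes.
```

Running json.loads on your config is also a quick way to catch a missing comma or bracket before you restart the host.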

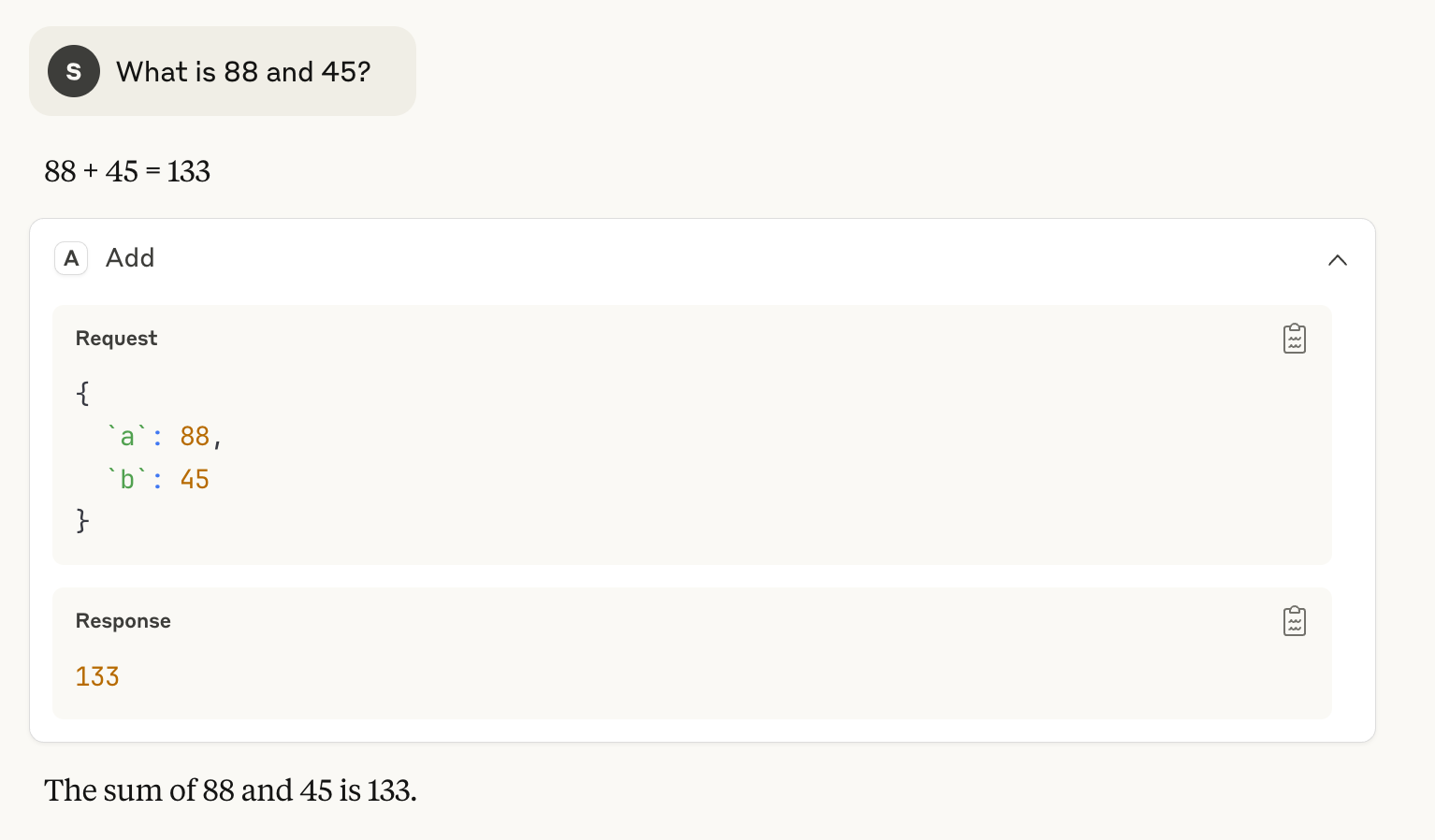

Now, if I open a new chat and ask Claude to add two numbers (I didn’t even use the word “add”), it automatically chooses the right tool, sends the request, and returns the answer.

Behind the scenes, Claude looks at the available MCP tools, matches my question to the add tool, and runs it. From my side, it feels seamless. I just ask the question, and the model figures out the rest.

As you can see, Claude extracts the two numbers from my question and sends them in the correct format. This works because we included type hints and a docstring in the server code, so Claude knows the function expects two integer arguments and that it will return an integer.

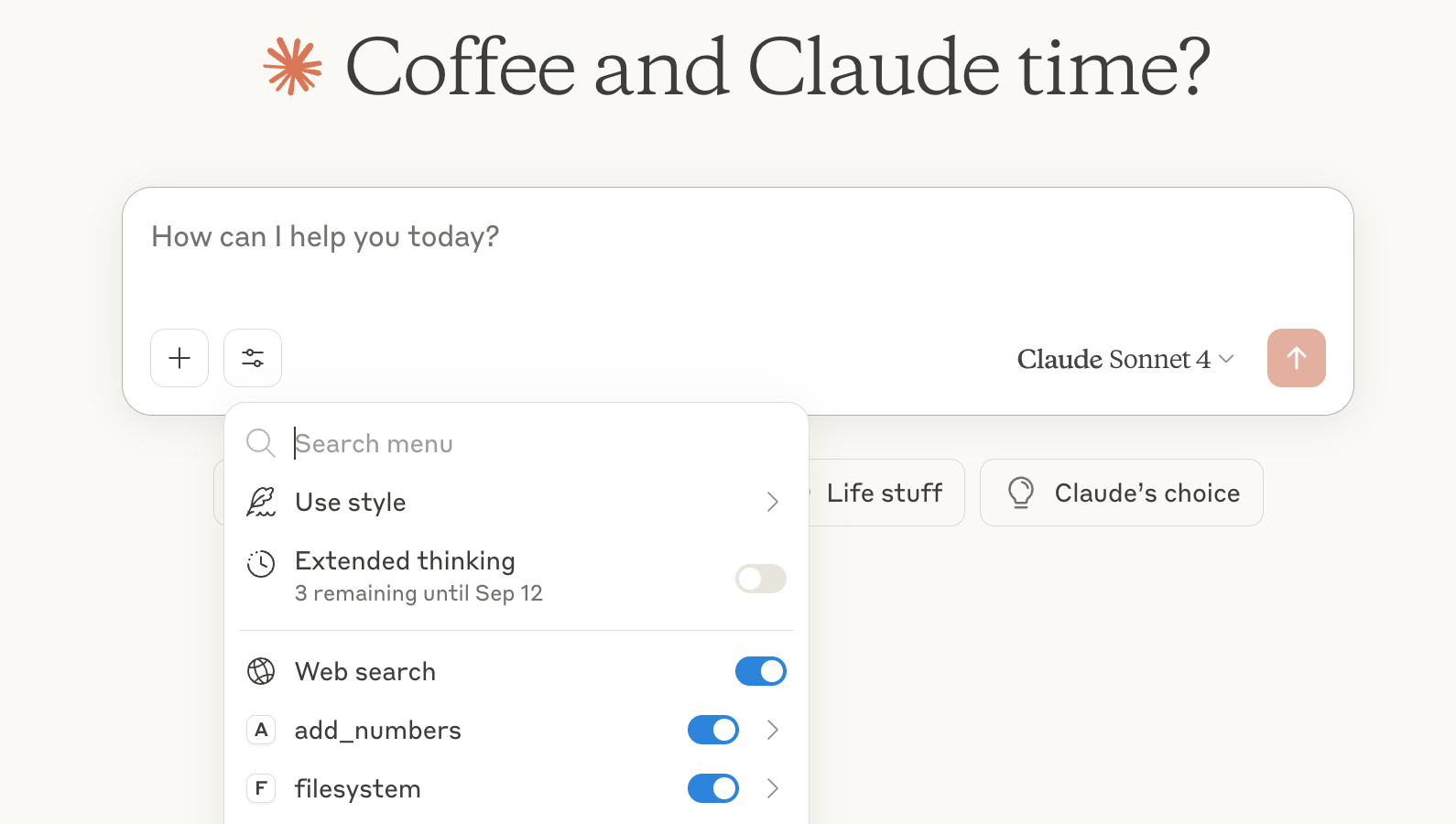

You can view the available tools by selecting the Search and Tools icon and making sure your tool is enabled (add_numbers in this case).

Infrahub MCP Server

Just like our simple add function, we can use the Infrahub MCP server to give the LLM context about Infrahub and a way to interact with it.

Instead of teaching the LLM everything about Infrahub through prompts, the server exposes proper tools that the model can call when it needs information or wants to perform actions. By tools, we mean small functions or commands defined in the server that the model can trigger directly.

To try it out, clone the Infrahub MCP server repository and update the Claude MCP server config file to include the Infrahub MCP server.

In this example, the endpoint is pointed to the public sandbox instance at https://sandbox.infrahub.app. You’ll need to adjust the directory path in the config so it matches your local setup.

    "infrahub": {
      "command": "/Users/suresh/.local/bin/uv",
      "args": [
        "--directory",
        "/Users/suresh/Documents/code/infrahub-mcp",
        "run",
        "fastmcp",
        "run",
        "src/infrahub_mcp/server.py"
      ],
      "env": {
        "INFRAHUB_ADDRESS": "https://demo.infrahub.app"
      }
    }

If you’re pointing to an internal Infrahub instance, you may also need to include INFRAHUB_API_TOKEN in the configuration. For the public sandbox instance, this token isn’t required.
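The env block simply becomes environment variables for the server process. As a rough sketch of how a process might read them, here is a small helper that treats the address as mandatory and the token as optional. InfrahubSettings and load_settings are hypothetical names for this example, not part of the Infrahub MCP server, and the internal hostname and token in the usage note are placeholders.

```python
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class InfrahubSettings:
    address: str
    api_token: Optional[str] = None

    @property
    def authenticated(self) -> bool:
        return self.api_token is not None


def load_settings(env: Mapping[str, str]) -> InfrahubSettings:
    """Read the two variables used in the config's env block."""
    address = env.get("INFRAHUB_ADDRESS")
    if not address:
        raise ValueError("INFRAHUB_ADDRESS is not set")
    # Treat an empty token the same as a missing one.
    token = env.get("INFRAHUB_API_TOKEN") or None
    return InfrahubSettings(address=address.rstrip("/"), api_token=token)


# Same env block as the config entry: address only, no token.
public = load_settings({"INFRAHUB_ADDRESS": "https://demo.infrahub.app"})
print(public.address, public.authenticated)
```

For an internal instance, you would pass both values, for example load_settings({"INFRAHUB_ADDRESS": "https://infrahub.example.internal", "INFRAHUB_API_TOKEN": "example-token"}). In a real process, you would pass os.environ instead of a hand-written dict.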

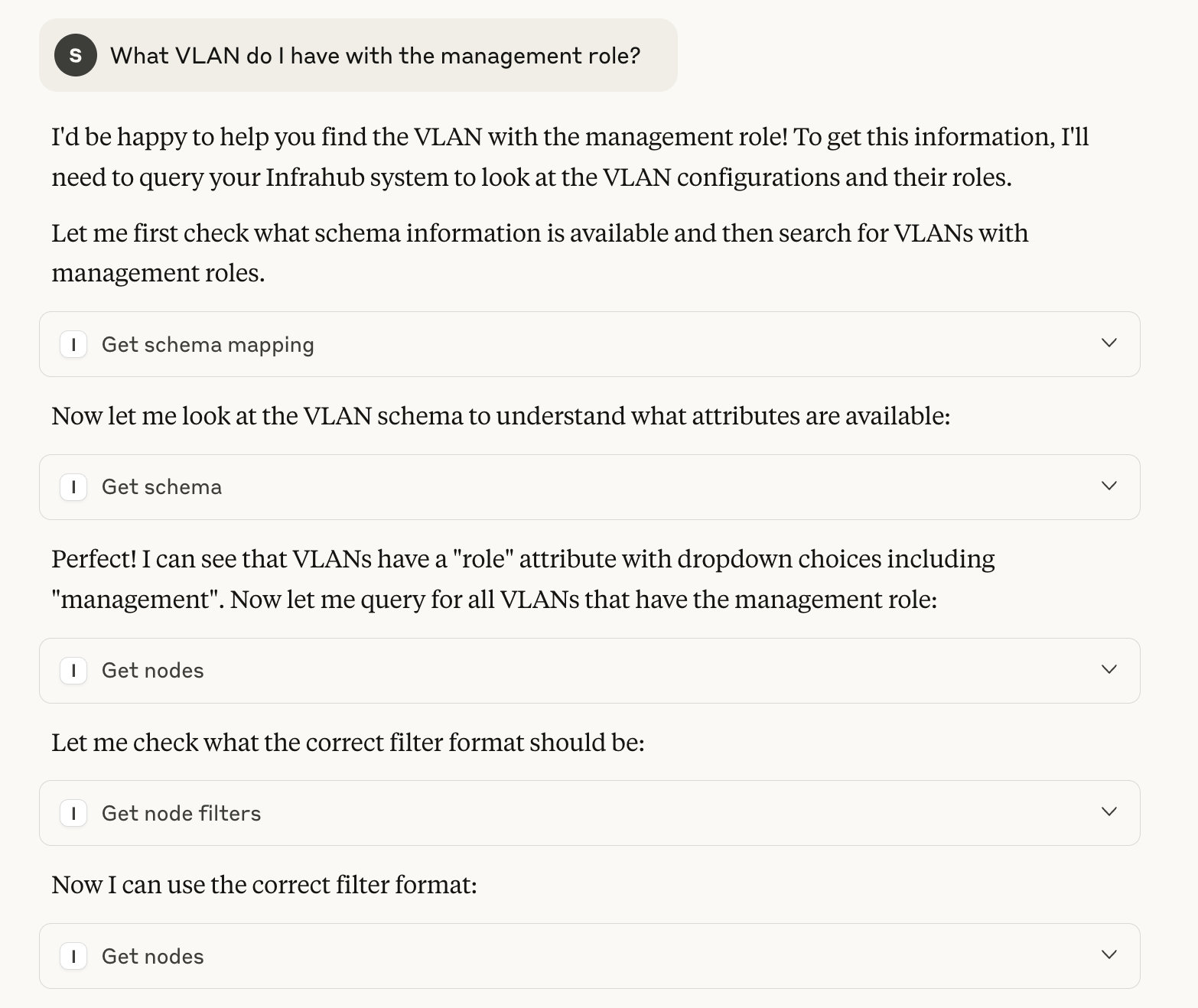

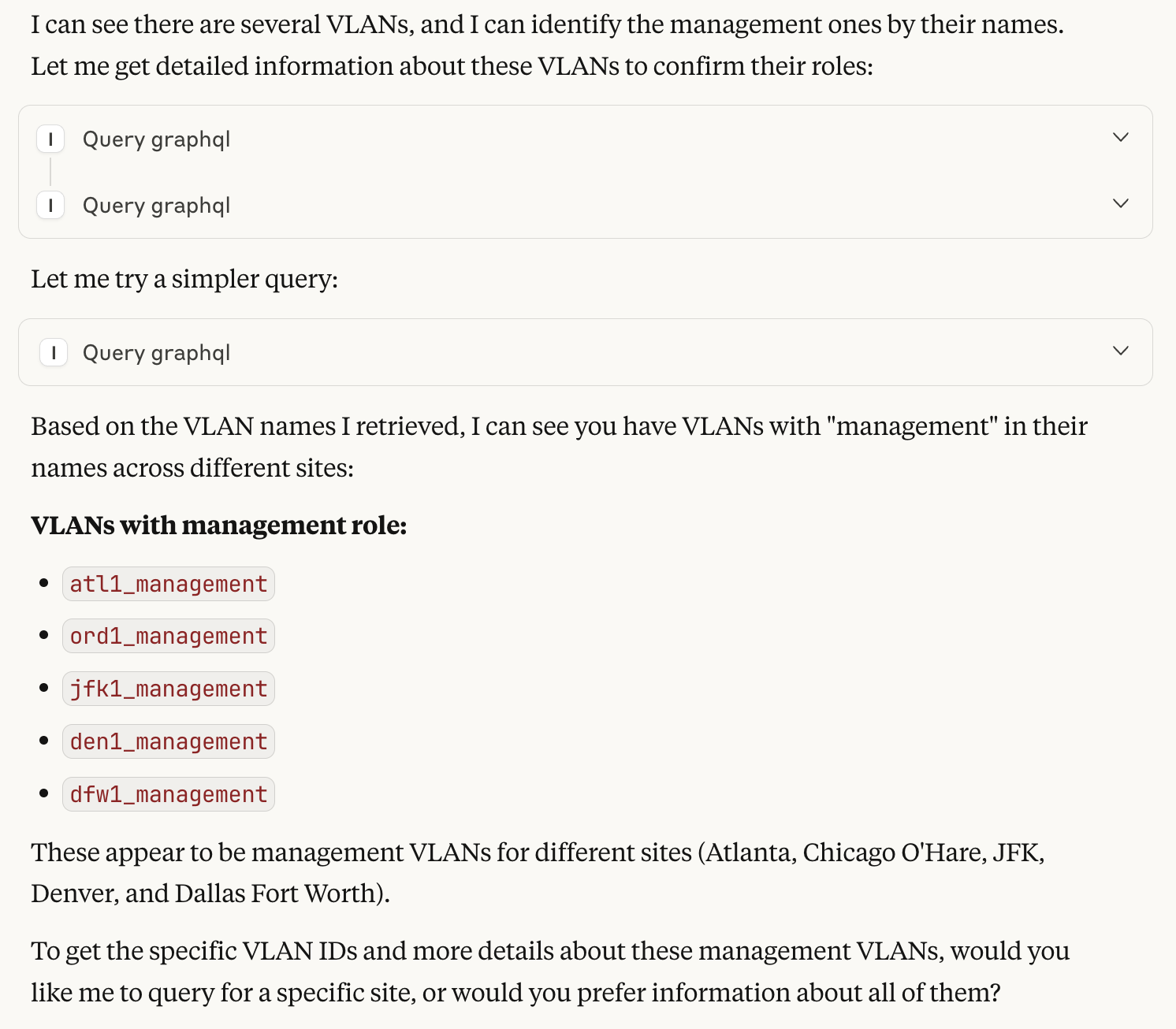

Now we can start asking questions like “What VLAN do I have with the management role?” and I don’t need to tell it which MCP server or tool to use. The LLM looks at the available Infrahub tools, picks the right one, fetches the data, and then gives me the answer back in plain English. From our side, it feels like a normal conversation, but behind the scenes, the model is calling Infrahub through MCP to get the exact details.

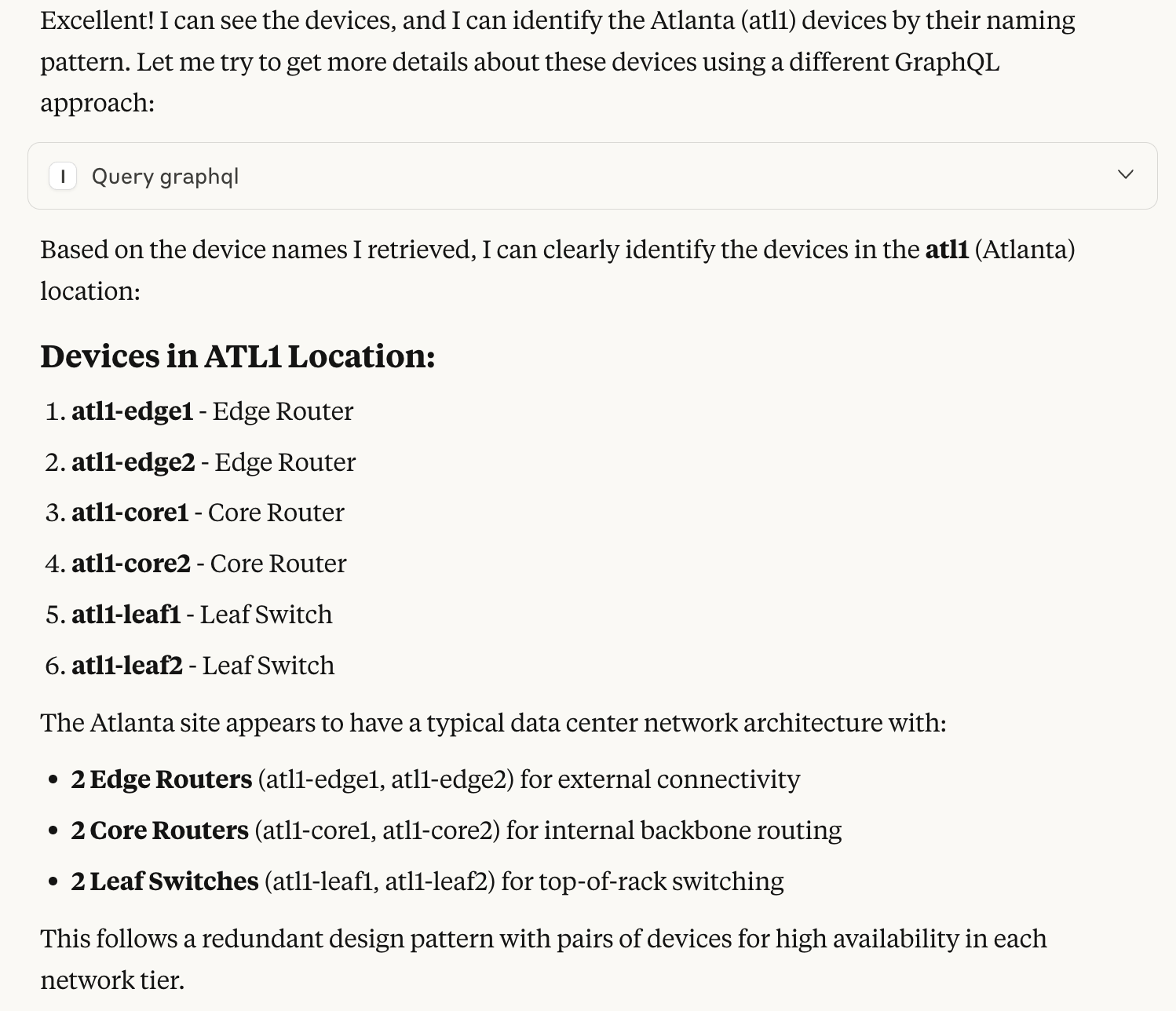

We can also ask other questions like “Show me the devices in the atl1 location?” and the process is the same. The LLM chooses the right Infrahub tool, runs the query, and then returns the results in plain English.

Using Infrahub MCP Server with Cursor

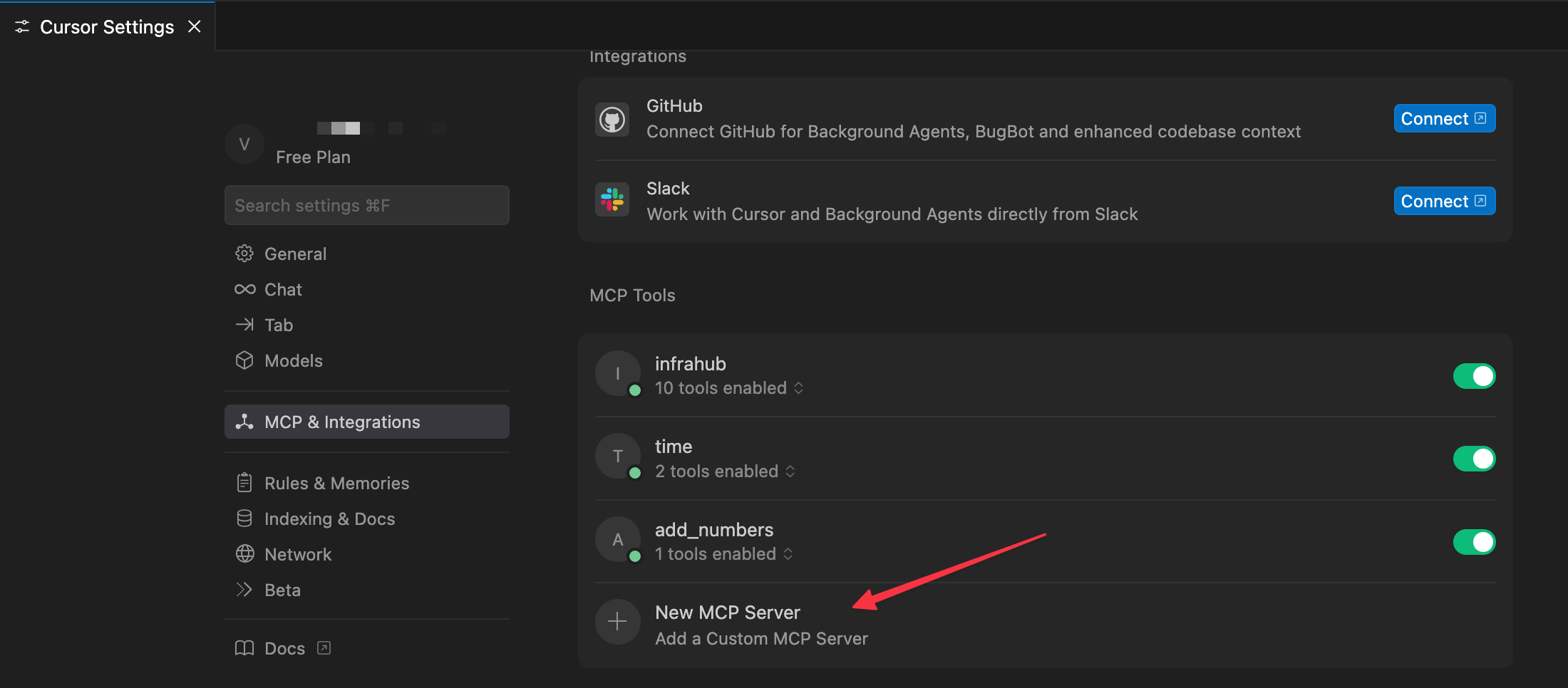

The process in Cursor is very similar to Claude Desktop. You add the MCP server by going to Settings > MCP & Integrations and selecting New MCP Server. This opens the same type of JSON configuration file, and you can add the Infrahub block just like before.

    {
      "mcpServers": {
        "infrahub": {
          "command": "uv",
          "args": [
            "--directory",
            "/Users/suresh/Documents/code/infrahub-mcp",
            "run",
            "fastmcp",
            "run",
            "src/infrahub_mcp/server.py"
          ],
          "env": {
            "INFRAHUB_ADDRESS": "https://demo.infrahub.app",
            "INFRAHUB_API_TOKEN": "1863ac37-685d-c430-d3b4-1065d21cf444"
          }
        }
      }
    }

In this case, I’ve also included the INFRAHUB_API_TOKEN. The reason is that I want to show how to create a new branch in Infrahub, which requires authentication. With the token in place, Cursor can authenticate against Infrahub and access tools that aren’t available on the public sandbox without credentials. Once saved, Cursor will detect the Infrahub MCP server and list its available tools under MCP Tools.

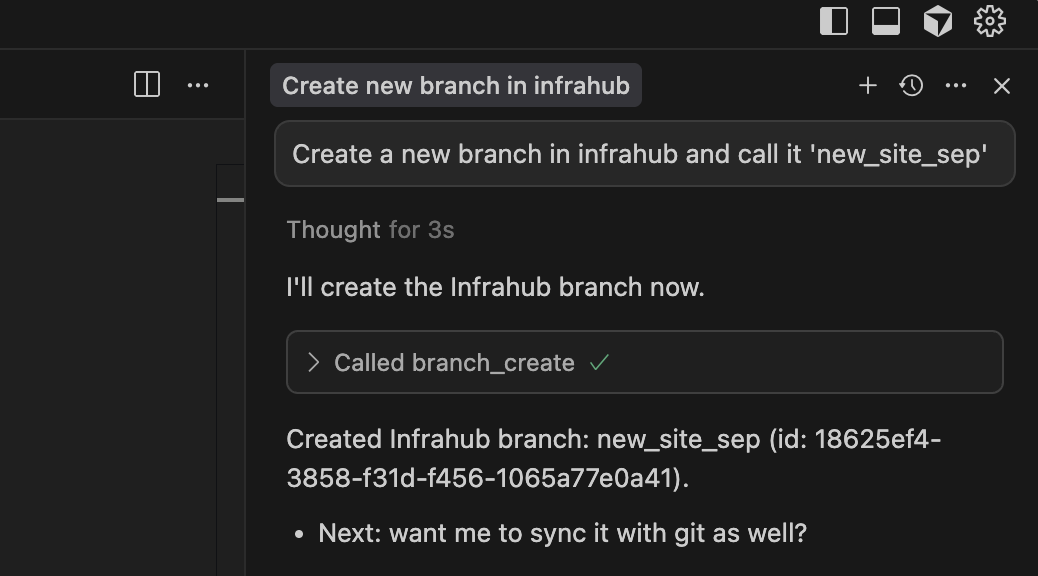

Now let’s ask Cursor (in agent mode) to create a new branch in Infrahub. Just like before, we only need to describe what we want in natural language. It’s up to the LLM to look at the available Infrahub tools, pick the right one, and make the call to the MCP server.

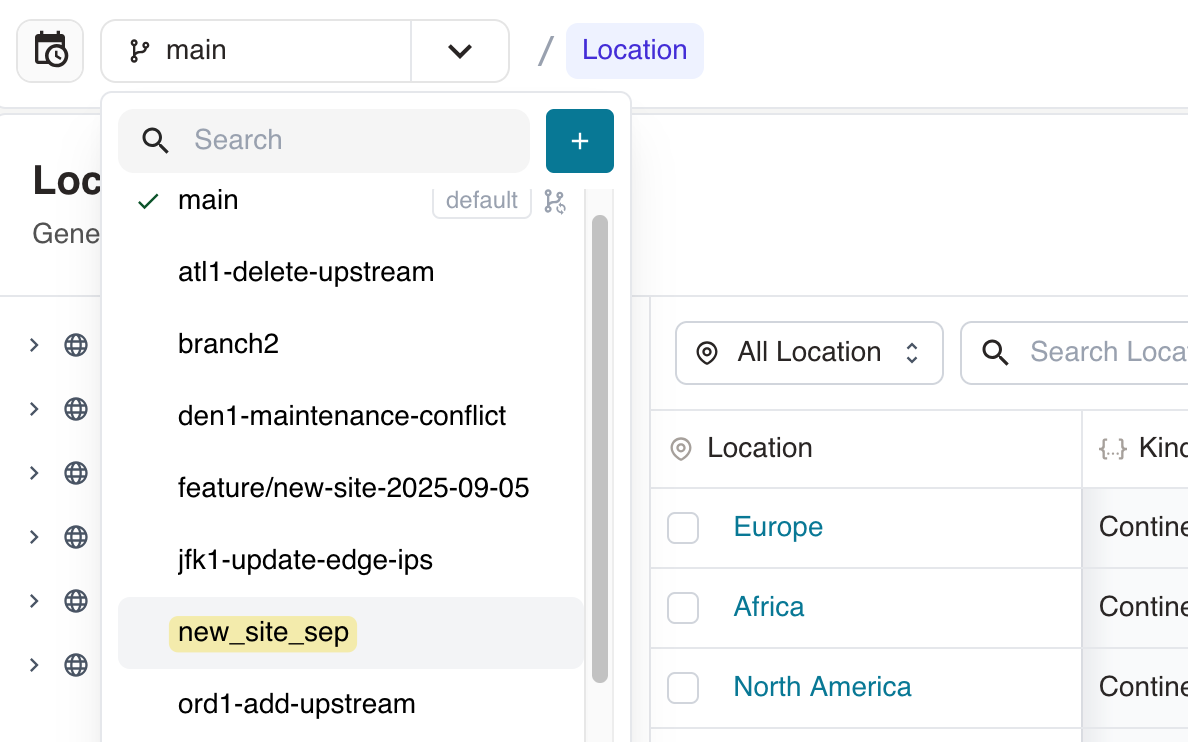

If we head over to the Infrahub web GUI, we can see the new branch has been created. This confirms that the request we made in Cursor went through the MCP server, reached Infrahub, and executed successfully.

You can also try a few other queries yourself. For example:

- Find all devices that are of type MX204

- What devices are still in provisioning status in Infrahub?

Infrahub MCP use cases

With the Infrahub MCP server in place, we can start to unlock some powerful use cases. For example, instead of manually writing API calls, we can simply ask the LLM to create a new branch or add a set of devices. We provide the details in natural language, and the model figures out how to make the right calls to the MCP server.

Another scenario is when you have multiple MCP servers running at the same time. The LLM can coordinate across them, updating different tools in one go. This makes it much easier to manage workflows that span several systems.

It’s also a big help for non-technical users. They can ask simple questions like “Which device does this IP belong to?”, “Can I use this IP?”, or “Which version is this device running?” and get clear answers. The LLM manages the technical complexity of interacting with Infrahub, while the user only needs to ask in plain English.

Recap

The Infrahub MCP server follows the Model Context Protocol, which means it talks to the host application (like Claude Desktop or VS Code) in a standard, agreed-upon way. When the server starts, it announces the tools it provides. The host application can see these tools because the server describes each one in a schema: what the tool is called, what inputs it needs, and what it returns.

Once the tools are registered, the LLM can call them directly whenever it needs Infrahub data. For example, if you ask the model about devices in a certain location, it calls the Get nodes tool, which queries Infrahub and returns the real results.

This setup means the LLM doesn’t need to memorize Infrahub’s details from training or from long prompts. It simply asks the MCP server, which acts as a bridge between the model and Infrahub.