Recently, I faced a unique challenge, I needed to export a massive amount of traffic logs from a Palo Alto Firewall for analysis. Initially, I thought it would be straightforward, log into the GUI, apply the necessary traffic log filter, and export the logs as a CSV file. Easy peasy, right? Well, not exactly. I quickly ran into a roadblock that made me rethink my approach.

In this blog post, I'll share the hurdles I encountered and how I managed to find a workaround to export the logs and analyze them using Python Pandas.

The Problem

By default, Palo Alto only exports 65535 rows in the CSV file, which is not nearly enough. If you have a large network, that amount might only cover a few minutes of logs. Even if you change the value, the maximum it can support is 1048576, which might cover maybe an hour's worth of logs. But for my use case, I needed at least a month of logs. I couldn't get what I wanted from the built-in report options, so I was scratching my head. I then tried to export the logs via SCP on the CLI, but again encountered the same maximum row limitations.

Export to an FTP Server

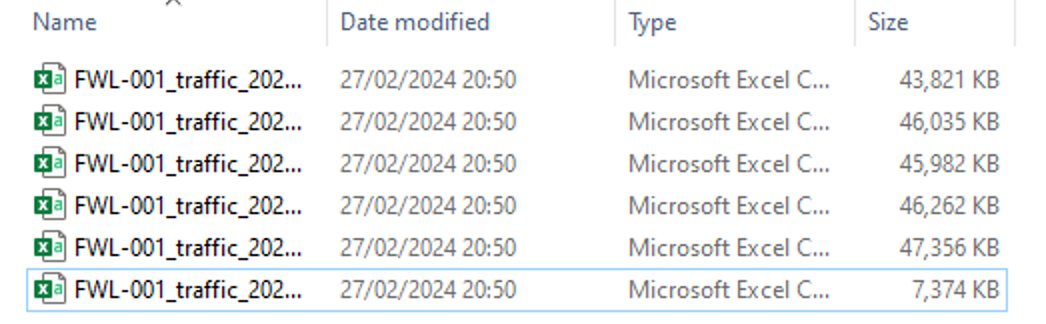

Then, for some reason, I thought, let's try via the FTP server, and bingo, that worked. With FTP, what happens is, if the CSV file hits the row limit, it just creates another file and continues this process. So, this time, at least I was able to get all the logs spread across multiple files. I think I ended up with around 20 or so files, each ranging in size from 200-300 MB.

ftp export log traffic query "addr.src in 10.10.1.0/24" start-time equal 2024/02/23@01:00:00 end-time equal 2024/02/27@23:59:00 to username:password@192.168.10.10

Cleaning up the Files

The next step is cleaning up a large volume of log files exported from a Palo Alto firewall to an FTP server. Imagine having over 20 files, each filled with detailed logs and each file being around 200-300 MB in size. The sheer volume and size make manual cleanup a challening, if not impossible task. And let's not even get started on trying to open these massive files in Excel.

So, I decided to use a bit of Python magic, specifically with the Pandas library, which is amazing for working with data.

import os

import pandas as pd

path_of_the_directory = "C:\\ftp"

for file in os.listdir(path_of_the_directory):

f = os.path.join(path_of_the_directory,file)

df = pd.read_csv(f, on_bad_lines='skip')

df = df[['Receive Time', 'Source Zone', 'Destination Zone', 'Source address', 'Destination address', 'IP Protocol', 'Destination Port', 'Application', 'Action', 'Rule']]

df.drop_duplicates(subset=df.columns.difference(['Receive Time']), inplace=True)

df.to_csv('cleaned_up.csv', mode='a', header=False, index=False)

df = pd.read_csv('cleaned_up.csv')

df.columns = ['Receive Time', 'Source Zone', 'Destination Zone', 'Source address', 'Destination address', 'IP Protocol', 'Destination Port', 'Application', 'Action', 'Rule']

df.drop_duplicates(subset=df.columns.difference(['Receive Time']), inplace=True)

df.to_csv('final.csv', index=False)Firstly, the script uses the os library to navigate through the directory where the log files are stored (C:\ftp). This approach is important because specifying each file name manually is not feasible when dealing with a large number of files. By iterating over each file in the specified directory, the script ensures that each and every file is processed.

Once a file is identified, the script reads it into a Pandas DataFrame. The script then filters the DataFrame to include only the columns of interest such as source and destination IPs, zones, rule name, etc.

The script then removes duplicate entries based on all columns except 'Receive Time.' This step is repeated for each file, with the cleaned data appended to a single consolidated file, cleaned_up.csv. In the final step, the script loads this consolidated file, applies a final deduplication (to ensure no duplicates were introduced during the append process), and saves the final clean dataset to final.csv.

Now, I've ended up with cleaned-up data, with the file size trimmed down to just a few MBs. If I ever need to tackle the same task again, all I have to do is export the logs and rerun the script. This simple yet effective method has made managing large volumes of data not just possible, but easy and efficient.